Equivalence does not prove the superiority of full frame (FF) over crop sensor (APS-C, M43) cameras. In fact, equivalence shows that equivalent photos are equivalent in terms of angle of view, depth of field, motion blur, brightness, etc., including QUALITY. Yes, equivalent photos are equivalent in quality.

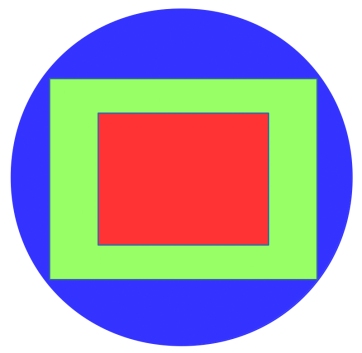

Refer to the illustration above, where we have a full frame lens (BLUE) on a full frame sensor (GREEN). Some full frame cameras are now capable of shooting in crop mode (APS-C), where only the area in RED is used. When a crop sensor LENS is used on a full frame sensor, only the area in RED is illuminated; the rest of the GREEN area is in complete darkness and therefore does not contribute to light gathering. The same is true when a full frame camera is forced to shoot in crop mode with a full frame lens: the camera automatically crops the image to the area in RED and the rest of the GREEN area is thrown away.

As the illustration shows, the central half of the full frame sensor is really just an APS-C sensor. If a crop sensor were indeed inferior in terms of light gathering, then logic would tell us that the center of every full frame shot should be noisier than the rest of the frame. We know this is not true. The light coming in from the lens spreads evenly throughout the entire frame: total light is spread over total area. As a matter of fact, the central half is the cleanest, because lenses are not perfect and their performance degrades as you move away from the center.
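A minimal sketch of the "total light over total area" idea, assuming perfectly even illumination (no vignetting) and Nikon DX dimensions of 23.5 × 15.6 mm for the APS-C crop (other APS-C sensors differ slightly). The function names are illustrative only. Under these assumptions the crop area receives roughly 42% of the light reaching a 36 × 24 mm frame, while the light per square millimetre is identical across the frame.

```python
# Assumed sensor dimensions in millimetres (typical published values).
FULL_FRAME_MM = (36.0, 24.0)
NIKON_DX_MM = (23.5, 15.6)


def area(dims):
    """Area of a rectangular sensor in mm^2."""
    width, height = dims
    return width * height


def crop_fraction(full, crop):
    """Fraction of the full-frame area covered by a centred crop."""
    return area(crop) / area(full)


def photons_in_crop(total_photons, full, crop):
    """Photons landing on the crop, assuming light is spread evenly over the frame."""
    return total_photons * crop_fraction(full, crop)


fraction = crop_fraction(FULL_FRAME_MM, NIKON_DX_MM)
print(f"The DX crop covers {fraction:.1%} of the full frame")

# Light per mm^2 is the same for the whole frame and for the crop.
total = 1_000_000
density_full = total / area(FULL_FRAME_MM)
density_crop = photons_in_crop(total, FULL_FRAME_MM, NIKON_DX_MM) / area(NIKON_DX_MM)
print(f"{density_full:.1f} vs {density_crop:.1f} photons per mm^2")
```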

Now suppose we have a full frame 50mm lens in front of a full frame sensor. Notice that the crop mode area (RED) does not capture the entire image projected by the 50mm lens: its angle of view is narrower than that of the full frame (GREEN) area. There are several ways we can capture the entire 50mm view while using crop mode:

- move backward

- use a wider lens (approx 35mm)

Both methods allow the RED area to capture more of the scene. A wider scene means more light is gathered. It means that if we force the RED area (APS-C) to capture exactly the same image as the GREEN area (FF) we will be forced to capture more light! More light means less noise! In equivalent images, APS-C is actually cleaner than full frame!!!

For example, if we go with option #2 using a wider lens, equivalent photos would be something like this:

RED (APS-C): 35mm, 1/125, f/5.6, ISO 100

GREEN (FF): 50mm, 1/125, f/8, ISO 200

This is exactly what the equivalence theory proposes. The difference in f-stop ensures that both have the same depth of field at the same distance to the subject. The faster f-stop for APS-C (f/5.6) guarantees that TWICE as much light is gathered. Notice that the full frame is now forced to shoot at a higher ISO to compensate for the smaller amount of light admitted by the narrower f/8 aperture. So if we use the same camera for both shots, say, a Nikon D810 shooting in normal mode with a 50mm lens and in crop mode with a 35mm lens, the crop mode image will be noticeably better. In equivalent photos, crop mode comes out one stop better. In equivalent photos, the smaller sensor results in BETTER quality!!!
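The arithmetic behind the two settings can be sketched as follows. This is an illustration under stated assumptions, not a formal statement of equivalence: it assumes the same subject distance and shutter speed, scales focal length and f-number by the crop factor and ISO by its square, and uses a crop factor of √2 (about 1.41), which is what the f/8 to f/5.6 pairing above implies. Nikon DX is usually quoted as 1.5×, which would give roughly 33mm and f/5.3 instead. The function names are hypothetical.

```python
import math


def equivalent_settings(focal_mm, f_number, iso, crop_factor):
    """Full-frame settings -> crop settings with the same framing and depth of field.

    Assumes the same subject distance and shutter speed.
    """
    return (
        focal_mm / crop_factor,
        f_number / crop_factor,
        iso / crop_factor ** 2,
    )


def stops_between(f_fast, f_slow):
    """How many stops more light per unit area the faster f-number admits."""
    return 2 * math.log2(f_slow / f_fast)


focal, f_number, iso = equivalent_settings(50, 8, 200, math.sqrt(2))
# The marked "f/5.6" is a rounded value for 4 * sqrt(2), about 5.66.
print(f"APS-C: {focal:.1f}mm, f/{f_number:.2f}, ISO {iso:.0f}")
print(f"f/5.6 vs f/8: {stops_between(5.6, 8):.2f} stops per unit area")
```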

The story does not end here, though. The full frame shot has about twice the area of the crop mode shot. If both images are printed at the same size, the crop mode shot will need to be enlarged more than the full frame shot. Enlargement results in loss of quality, so the full frame image will have an advantage over the crop mode image. Whatever the crop mode shot gained from the increase in gathered light is lost by a proportional amount during enlargement. In the end, both full frame and crop mode shots result in exactly THE SAME print quality!!!

Bottom line, full frame will not give you cleaner images than crop sensors, assuming they use the same sensor technology (e.g. D800, D7000, K5). They will result in equivalent print quality if forced to shoot equivalent images.

Full frame superiority busted!

Interesting how you’ve moved from “debunking equivalence” to “using equivalence” 🙂

Noise is almost entirely a function of how much light is collected, i.e. of how much light the whole image is drawn with:

– If you change the exposure time, you change the number of photons captured

– If you change the aperture number, you change the number of photons captured

– If you crop part of the image out, you change the number of photons captured (you also need to use a different focal length to capture the same scene)

If there is no lack of light and the idea is to capture the same scene with maximum quality, then the system which collects the most light will have the best quality – for example FF: 150mm focal length, f/15, 1/1000s and ISO 100 vs. APS-C 100mm, f/10, 1/2250s and ISO 100. The shutter speed difference is because the bigger sensor has 2.25 times the size, thus it can collect 2.25 times more light without overexposure.
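The numbers in this example can be checked with a small sketch, assuming light per unit area is proportional to t/N² and total light is that times the sensor area (relative areas 2.25 and 1 for a 1.5× crop). The helper names are illustrative. Under those assumptions both settings give the same exposure per unit area, both lenses have the same 10 mm entrance pupil, and the full frame sensor collects 2.25 times the total light.

```python
def exposure_per_area(f_number, shutter_s):
    """Relative light per unit sensor area: proportional to t / N^2."""
    return shutter_s / f_number ** 2


def total_light(f_number, shutter_s, relative_area):
    """Relative light collected by the whole sensor."""
    return exposure_per_area(f_number, shutter_s) * relative_area


def entrance_pupil_mm(focal_mm, f_number):
    """Diameter of the entrance pupil: focal length divided by f-number."""
    return focal_mm / f_number


ff = {"focal": 150, "N": 15, "t": 1 / 1000, "area": 2.25}
apsc = {"focal": 100, "N": 10, "t": 1 / 2250, "area": 1.0}

for name, cam in (("FF", ff), ("APS-C", apsc)):
    print(
        name,
        f"pupil {entrance_pupil_mm(cam['focal'], cam['N']):.1f} mm,",
        f"exposure {exposure_per_area(cam['N'], cam['t']):.3e},",
        f"total {total_light(cam['N'], cam['t'], cam['area']):.3e}",
    )

ratio = total_light(ff["N"], ff["t"], ff["area"]) / total_light(apsc["N"], apsc["t"], apsc["area"])
print(f"FF collects {ratio:.2f}x the total light")
```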

Additionally, when one uses a smaller format, the image drawn by the lens has to be enlarged more to get the same output size as with a larger format, so all of its aberrations will also be enlarged more. This is why, for example, large format lenses are quite simple and not especially good in quality – they don’t need to be, as the image is enlarged very little. The minuscule lenses of mobile phone cameras, on the other hand, are extraordinary in quality – they have to be, because the image will be enlarged a lot.

Oh, and moving backwards in no way changes the field of view even though you wrote it does. Moving backwards creates a different image with different perspective.

You really should try to educate yourself, for example by reading material from https://aberration43mm.wordpress.com/comparing-camera-formats/ or some other such place.

You seem to forget that noise is only affected by how much light is gathered PER SENSEL. This is quite obvious. For example, in a single image, parts that are bright look clean while parts that are dark are noisy. Looking only at total light is very stupid. Suppose that you are shooting a black and white checkerboard: the dark squares will always be noisier than the white squares. Even if you shot it with the largest sensor camera you could find, the dark squares would always be noisy. Ergo, it doesn’t matter if your sensor is large or small; dark parts will always be noisy by exactly the same level. What matters is senSEL size and never senSOR size.

You need to understand very basic photography. Photography was established way before digital sensors. A 35mm Kodak Ektar has exactly the same property as an 8×10 Ektar. Same grain, meaning same (digital) noise. Of course 8×10 sheet film is capable of bigger enlargements but that is not the point. An enlarged 35mm film will still look clean if viewed from farther away. Noise due to enlargement is no different to noise due to viewing distance. Viewing distance does not change the property of film therefore enlargement does not change the inherent property of the image. Therefore film size or sensor size has got nothing to do with SNR.

“for example FF: 150mm focal length, f/15, 1/1000s and ISO 100 vs. APS-C 100mm, f/10, 1/2250s and ISO 100. The shutter speed difference is because the bigger sensor has 2.25 times the size, thus it can collect 2.25 times more light without over-exposure.”

ROFL! LMAO!

Exposure is total light over total area! Just because you have a larger sensor does not mean it is more difficult to overexpose it. F/5.6 at 1/125 ISO 100 is EXACTLY the same regardless of sensor size.

That is why in film, a 35mm and 8×10 Ektar are exposed and developed in exactly the same way.

Please learn how to shoot. Too much gear talk leads to stupidity like yours.

>> You seem to forget that noise is only affected by how much light is gathered PER SENSEL.

“Sensel” is not a proper word. You mean pixel or photodiode. Anyhow, the noise of an image depends on all the light the image is drawn with. This has to consider all pixels, not just one. One pixel doesn’t tell us anything about image noise, just about pixel noise (and even that only inaccurately, unless we sample the same pixel site multiple times using separate exposures).
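The distinction between pixel noise and image noise can be illustrated with a shot-noise-only model (read noise and other sources are ignored, which is a simplifying assumption). Photon arrivals are Poisson-distributed, so the noise is the square root of the signal; when several pixels are combined to represent the same part of the output image, their photons add up and the SNR grows with the square root of the total. The function names and photon counts are hypothetical.

```python
import math


def shot_noise_snr(photons):
    """Poisson shot noise: noise = sqrt(signal), so SNR = sqrt(signal)."""
    return math.sqrt(photons)


def patch_snr(photons_per_pixel, pixels_in_patch):
    """SNR of an output-image patch built from several identical pixels.

    Only the total photon count of the patch matters in this model.
    """
    return shot_noise_snr(photons_per_pixel * pixels_in_patch)


# Hypothetical numbers: identical pixels, 100 photons each.
print("single pixel:", patch_snr(100, 1))
# The same output patch covered by 4 pixels, e.g. on a sensor with twice the
# linear size and identical pixels.
print("4-pixel patch:", patch_snr(100, 4))
```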

>>This is quite obvious. For example in a single image, parts that are bright look clean while parts that are dark are noisy.

The SNR of bright parts is of course higher.

>> Looking only at total light is very stupid.

If you’re interested in image quality you look at the whole image, not at a pixel. Did you not have a look at this image: https://aberration43mm.files.wordpress.com/2015/02/compared.png

Can you explain to me why in that image the color patches appear to have different amounts of noise even though the pixels used were identical for each photo?

>> Suppose that you are shooting a black and white checkerboard: the dark squares will always be noisier than the white squares.

They will always appear noisier to us. In reality there is more photon shot noise the more light there is, but even more signal, so the signal-to-noise ratio is higher; the more light, the less noisy the result appears to our eyes/brain.
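A small sketch of that point, again counting photon shot noise only and using hypothetical photon counts for a dark and a bright square: the bright square has more absolute noise but a much higher signal-to-noise ratio.

```python
import math

# Hypothetical mean photon counts per pixel; not measured values.
squares = {"dark": 100, "bright": 10_000}

for name, signal in squares.items():
    noise = math.sqrt(signal)  # Poisson shot noise grows with the signal...
    snr = signal / noise       # ...but the signal grows faster, so SNR rises
    print(f"{name:>6}: signal {signal}, noise {noise:.0f}, SNR {snr:.0f}")
```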

>> Even if you shot it with the largest sensor camera you could find, the dark squares will always be noisy.

Noisy compared to what? https://aberration43mm.files.wordpress.com/2015/02/compared.png shows clearly that smaller sensor causes more noise with identical exposure with identical pixels.

With the same exposure (which means same ambient light, exposure time and aperture number, nothing more) a bigger sensor captures more light than a smaller sensor.

>> Ergo, it doesn’t matter if your sensor is large or small, dark parts will always be noisy by exactly the same level.

Not according to evidence, including https://aberration43mm.files.wordpress.com/2015/02/compared.png – why not repeat the experiment or tell me why it’s wrong?

>> What matters is senSEL size and never senSOR size.

There is no such thing as sensel – really, it’s not used by anyone in the image sensor community. Let me quote the inventor of the CMOS active pixel sensor, Eric Fossum (http://www.dpreview.com/forums/post/54547179): “OK, first thing is, quit calling them sensels! This is not a word used in the image sensor community. It is not a bad word, but just identifies you instantly as an outsider.”

Please explain the image I’ve linked to and why it disagrees with your opinion.

>> You need to understand very basic photography.

I do.

>> Photography was established way before digital sensors. A 35mm Kodak Ektar has exactly the same property as an 8×10 Ektar. Same grain, meaning same (digital) noise. Of course 8×10 sheet film is capable of bigger enlargements but that is not the point.

Actually this is where you fail. The 8×10 sheet will be enlarged *LESS* than 135-film to get the same output size. This is the reason why with the same output size the 8×10 will produce much higher image quality.

How much you can enlarge from a noise point of view is the same in each case; the output size will be different with the same enlargement factor. For example, to create a print with a diagonal of 300mm (12 inches) you don’t enlarge the 8×10 film at all, but you need to enlarge the 135 film by a factor of about 7 (on each axis, making the output about 50 times larger than the film by area)!!!
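The enlargement factors in this example can be checked with a quick sketch, assuming 36 × 24 mm for 135 film and a nominal 8 × 10 inch (203.2 × 254 mm) sheet, and comparing diagonals. It gives a linear enlargement of about 6.9× (about 48× by area) for 135 film, while the 8×10 sheet's diagonal (about 325 mm) already exceeds the 300 mm print, so that sheet is slightly reduced rather than enlarged.

```python
import math

# Assumed frame sizes in millimetres.
FILM_135 = (36.0, 24.0)
SHEET_8X10 = (8 * 25.4, 10 * 25.4)


def diagonal_mm(dims):
    """Diagonal of a rectangular frame."""
    return math.hypot(*dims)


def linear_enlargement(print_diagonal_mm, frame):
    """Scale factor on each axis needed to reach the given print diagonal."""
    return print_diagonal_mm / diagonal_mm(frame)


for name, frame in (("135 film", FILM_135), ("8x10 sheet", SHEET_8X10)):
    k = linear_enlargement(300, frame)
    print(f"{name}: {k:.2f}x linear, {k * k:.1f}x by area")
```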

>> An enlarged 35mm film will still look clean if viewed from farther away.

When you compare, you look at the prints from equal distances regardless of the capturing device.

>> Noise due to enlargement is no different to noise due to viewing distance.

BINGO!!! This is where we agree. Now, if I want to print a picture 300mm in diagonal, how much do I need to enlarge the 8×10 film image??? What about the 135 film image? Different enlargement, different noise.

>> Viewing distance does not change the property of film therefore enlargement does not change the inherent property of the image. Therefore film size or sensor size has got nothing to do with SNR.

But you just said that “noise due to enlargement is no different to noise due to viewing distance”. Thus you implied that there is difference in noise because of enlargement, but now you take a 180 degree turn back! Wow.

Why are the two color patches not identical from a noise point of view in https://aberration43mm.files.wordpress.com/2015/02/compared.png ??? That is hard evidence against your argument.

>>>>“for example FF: 150mm focal length, f/15, 1/1000s and ISO 100 vs. APS-C 100mm, f/10, 1/2250s and ISO 100. The shutter speed difference is because the bigger sensor has 2.25 times the size, thus it can collect 2.25 times more light without over-exposure.”

>>ROFL! LMAO!

No need to be impolite.

>> Exposure is total light over total area!

No it’s not. Exposure is the combination of ambient light, exposure time and aperture number. https://en.wikipedia.org/wiki/Exposure_%28photography%29

>> Just because you have a larger sensor does not mean it is more difficult to overexpose it. F/5.6 at 1/125 ISO 100 is EXACTLY the same regardless of sensor size.

Yes. But if you use the same exposure parameters on different formats, the bigger format will collect more light. If you want to collect the same light (“make the same output quality”), then the bigger format will use a larger f-number to match the total light (and depth of field). And if you do that you will have a smaller exposure, and thus less chance of overexposure.

Read this and hopefully you will understand that considering only total light is very short sighted:

What matters is correct exposure. Total light over total area. And please do not lecture me on exposure. Total light over total area is controlled by f-stop (not aperture) and shutter speed. Learn about that here:

If you still don’t get it then nothing ever will. And if you ever post another reply that is obviously answered in those blog posts, I will totally ignore it.

>> You seem to forget that noise is only affected by how much light is gathered PER SENSEL.

“Sensel” is not a proper word. You mean pixel or photodiode. Anyhow, the noise of an image depends on all the light the image is drawn with. This has to consider all pixels, not just one. One pixel doesn’t tell us anything about image noise, just about pixel noise (and even that only inaccurately, unless we sample the same pixel site multiple times using separate exposures).

>>This is quite obvious. For example in a single image, parts that are bright look clean while parts that are dark are noisy.

The SNR of bright parts is of course higher.

>> Looking only at total light is very stupid.

If you’re interested in image quality you look at the whole image, not at a pixel. Did you not have a look at this image: https://aberration43mm.files.wordpress.com/2015/02/compared.png

Can you explain to me why in that image the color patches appear to have different amounts of noise even though the pixels used were identical for each photo?

>> Suppose that you are shooting a black and white checkerboard: the dark squares will always be noisier than the white squares.

They will always appear noisier to us. In reality there is more photon shot noise the more light there is, but even more signal, so the signal-to-noise ratio is higher; the more light, the less noisy the result appears to our eyes/brain.

>> Even if you shot it with the largest sensor camera you could find, the dark squares will always be noisy.

Noisy compared to what? https://aberration43mm.files.wordpress.com/2015/02/compared.png shows clearly that smaller sensor causes more noise with identical exposure with identical pixels.

With the same exposure (which means same ambient light, exposure time and aperture number, nothing more) a bigger sensor captures more light than a smaller sensor.

>> Ergo, it doesn’t matter if your sensor is large or small, dark parts will always be noisy by exactly the same level.

Not according to evidence, including https://aberration43mm.files.wordpress.com/2015/02/compared.png – why not repeat the experiment or tell me why it’s wrong?

>> What matters is senSEL size and never senSOR size.

There is no such thing as sensel – really, it’s not used by anyone in the image sensor community. Let me quote the inventor of the CMOS active pixel sensor, Eric Fossum (http://www.dpreview.com/forums/post/54547179): “OK, first thing is, quit calling them sensels! This is not a word used in the image sensor community. It is not a bad word, but just identifies you instantly as an outsider.”

Please explain the image I’ve linked to and why it disagrees with your opinion.

There was photography before digital sensors existed. A sensel is a sensor pixel, as opposed to a printed or image pixel. The noise in pixels changes depending on print size and viewing distance. The noise in sensels is fixed at capture time.

Sensels or film grain, the semantics don’t matter if it is a well understood concept. Unfortunately you do not understand the difference between sensel and pixel. And name dropping Eric Fossum does not make you any more credible. Knowing about digital sensors does not make you a photographer. Knowing just about the sensor does not guarantee you understand photography as a whole. You and Eric do not understand photography. I feel sorry for bringing Eric into this argument, but both of you are brainwashed by the idiots in dpreview.

>> You need to understand very basic photography.

I do.

>> Photography was established way before digital sensors. A 35mm Kodak Ektar has exactly the same property as an 8×10 Ektar. Same grain, meaning same (digital) noise. Of course 8×10 sheet film is capable of bigger enlargements but that is not the point.

Actually this is where you fail. The 8×10 sheet will be enlarged *LESS* than 135-film to get the same output size. This is the reason why with the same output size the 8×10 will produce much higher image quality.

How much you can enlarge from a noise point of view is the same in each case; the output size will be different with the same enlargement factor. For example, to create a print with a diagonal of 300mm (12 inches) you don’t enlarge the 8×10 film at all, but you need to enlarge the 135 film by a factor of about 7 (on each axis, making the output about 50 times larger than the film by area)!!!

>> An enlarged 35mm film will still look clean if viewed from farther away.

When you compare, you look at the prints from equal distances regardless of the capturing device.

>> Noise due to enlargement is no different to noise due to viewing distance.

BINGO!!! This is where we agree. Now, if I want to print a picture 300mm in diagonal, how much do I need to enlarge the 8×10 film image??? What about the 135 film image? Different enlargement, different noise.

>> Viewing distance does not change the property of film therefore enlargement does not change the inherent property of the image. Therefore film size or sensor size has got nothing to do with SNR.

But you just said that “noise due to enlargement is no different to noise due to viewing distance”. Thus you implied that there is difference in noise because of enlargement, but now you take a 180 degree turn back! Wow.

Why are the two color patches not identical from a noise point of view in https://aberration43mm.files.wordpress.com/2015/02/compared.png ??? That is hard evidence against your argument.

>>>>“for example FF: 150mm focal length, f/15, 1/1000s and ISO 100 vs. APS-C 100mm, f/10, 1/2250s and ISO 100. The shutter speed difference is because the bigger sensor has 2.25 times the size, thus it can collect 2.25 times more light without over-exposure.”

>>ROFL! LMAO!

No need to be impolite.

>> Exposure is total light over total area!

No it’s not. Exposure is the combination of ambient light, exposure time and aperture number. https://en.wikipedia.org/wiki/Exposure_%28photography%29

>> Just because you have a larger sensor does not mean it is more difficult to overexpose it. F/5.6 at 1/125 ISO 100 is EXACTLY the same regardless of sensor size.

Yes. But if you use the same exposure parameters on different formats, the bigger format will collect more light. If you want to collect the same light (“make the same output quality”), then the bigger format will use a larger f-number to match the total light (and depth of field). And if you do that you will have a smaller exposure, and thus less chance of overexposure.

>> That is why in film, a 35mm and 8×10 Ektar are exposed and developed in exactly the same way.

Sure, but with the same exposure parameters you’ll get different depth of field and noise when printed to the same output size. If you want to create the same output, then you’ll use a much smaller exposure on the larger format.

>> Please learn how to shoot. Too much gear talk leads to stupidity like yours.

Why so hostile and insulting? Why not look at evidence?

If you were right, this image (https://aberration43mm.files.wordpress.com/2015/02/compared.png) would look different.

That example disproves your point while proving mine. Either there is something wrong, in which case I’d be delighted to know what, or you’re just wrong.

You can repeat the test yourself to see if the glove fits or not. How it was done seems to be explained here: https://aberration43mm.wordpress.com/2015/04/26/sensor-size-and-image-quality-examples/

Now, why is looking at evidence stupidity and ignoring it not?

Sorry, but this is so funny that I can’t help replying. The noise in a smaller sensor is no different from that in a larger sensor as long as they use the same technology. Have a look at the SCREEN SNR of the ancient and tiny m43 Olympus E-M5 and compare it with the latest and greatest full frame Canon 5DS. They are exactly the same.

Of course when you enlarge the m43 to stupid 50Mp you will enlarge everything including noise. But then if I view it from farther away I will cancel this apparent increase in noise. So what is your point? Did I change the inherent image noise by moving forward and back? You seem to believe that there is SNR magic in my legs lol!!!

>>>>“for example FF: 150mm focal length, f/15, 1/1000s and ISO 100 vs. APS-C 100mm, f/10, 1/2250s and ISO 100. The shutter speed difference is because the bigger sensor has 2.25 times the size, thus it can collect 2.25 times more light without over-exposure.”

>>ROFL! LMAO!

No need to be impolite.

>> Exposure is total light over total area!

No it’s not. Exposure is the combination of ambient light, exposure time and aperture number. https://en.wikipedia.org/wiki/Exposure_%28photography%29

>> Just because you have a larger sensor does not mean it is more difficult to overexpose it. F/5.6 at 1/125 ISO 100 is EXACTLY the same regardless of sensor size.

Yes. But if you use the same exposure parameters on different formats, the bigger format will collect more light. If you want to collect the same light (“make the same output quality”), then the bigger format will use a larger f-number to match the total light (and depth of field). And if you do that you will have a smaller exposure, and thus less chance of overexposure.

>> That is why in film, a 35mm and 8×10 Ektar are exposed and developed in exactly the same way.

Sure, but with the same exposure parameters you’ll get different depth of field and noise when printed to the same output size. If you want to create the same output, then you’ll use a much smaller exposure on the larger format.

Wrong again. The only thing affected by captured light is FILM GRAIN. Film enlargement or printing has absolutely got nothing to do with captured light. Printing is a POST-CAPTURE process.

I will repeat what I have said: the APPARENT NOISE caused by enlargement is no different to the APPARENT NOISE caused by viewing distance. Why not test this yourself: move forward and back and see if noise changes. It will.

So by your very broken logic, by moving forward and back I have changed the amount of light in the image even after I clicked the shutter. Isn’t that the most ridiculous claim?

That’s why DxOMark measures the REAL SNR of sensors and plots it as 18% SCREEN SNR. What you full frame fanboys sell is the interpolated PRINT SNR, which has got nothing to do with sensor performance.

For example, if you compare a D4 and a D800 at DxOMark’s standard 8Mp (8×10) print size, both sensors come out “equal” even though the D4 is actually superior. This is easily proven: if the standardised print size were 16Mp, the D4 would come out better than the D800. So, if total light matters, why is it that the D4 vs D800 RELATIVE performance is different at different print sizes?!!! It should always be the same because total light has not changed, yet at 8Mp they are equal while at 16Mp and even at 36Mp the D4 beats the D800.
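The print normalisation being argued about can be sketched as below. This is an assumption-laden illustration, not DxOMark's published code: it assumes an 8 Mpix reference (the figure quoted above) and that downsampling N pixels to N₀ averages them, raising SNR by √(N/N₀), i.e. by 10·log10(N/N₀) dB. The camera names and screen SNR values are hypothetical placeholders, not measurements of the D4, D800 or any other camera.

```python
import math

REFERENCE_MP = 8.0  # reference output size in megapixels (figure quoted in the text)


def print_snr_db(screen_snr_db, sensor_mp, reference_mp=REFERENCE_MP):
    """Normalise per-pixel (screen) SNR to a reference output size.

    Averaging sensor_mp / reference_mp pixels into each output pixel raises SNR
    by the square root of that ratio, which is 10*log10(ratio) in decibels.
    """
    return screen_snr_db + 10 * math.log10(sensor_mp / reference_mp)


# Hypothetical cameras: (megapixels, screen SNR in dB). Not real measurements.
cameras = {"camera_a": (16.0, 40.0), "camera_b": (36.0, 37.0)}

for name, (mp, screen_db) in cameras.items():
    print(f"{name}: screen {screen_db:.1f} dB -> print {print_snr_db(screen_db, mp):.2f} dB")
```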

The only conclusion is that PRINT SNR has got nothing to do with light. It’s got everything to do with SENSOR (SCREEN) SNR and IMAGE size (number of pixels) only.

I will not respond to your other comments until you get hit by a bigger cluebat.

>> Please learn how to shoot. Too much gear talk leads to stupidity like yours.

Why so hostile and insulting? Why not look at evidence?

If you were right, this image (https://aberration43mm.files.wordpress.com/2015/02/compared.png) would look different.

That example disproves your point while proving mine. Either there is something wrong, in which case I’d be delighted to know what, or you’re just wrong.

You can repeat the test yourself to see if the glove fits or not. How it was done seems to be explained here: https://aberration43mm.wordpress.com/2015/04/26/sensor-size-and-image-quality-examples/

Now, why is looking at evidence stupidity and ignoring it not?

Try enlarging the bigger image by exactly the same proportion and you will see EXACTLY the same noise as that of the enlarged tiny sensor.

So, did the larger image suddenly lose some light by enlarging?

And if print size is indeed a gauge of total light then what happens if you print at a smaller size? Will the smaller print become brighter? Will it overexpose? Where does all the extra light go when you downsize? ROFL!!!

Can you see your broken logic? If you still don’t understand please leave. I have no intention of educating a brainwashed mind.

Very good post

Your bottom line is wrong.

It is correct to say that equivalence needs to calculate equivalent focal length, aperture and ISO. As a result, you will get mostly identical results with different sensor sizes.

Using equivalence for ISO shows that the smaller sensor has comparable high ISO values and is actually able to compete with the large sensor in this area. On the other hand, it shows that the smaller sensor is missing the low ISO values, which are always used to get cleaner images when enough light and time are available.

I decided to use MFT because of the size and weight of the equipment, but in FF terms I’m using ISO 400-6400 (not 100-1600). In addition, FF systems offer larger lenses that gather more light, if you want or can live with shallow DOF.

Bottom line, full frame will give you cleaner images than crop sensors if you use low ISO settings and larger apertures, which are not available on the small sensor camera.

I’ll respond to your wrong arguments later 🙂

Your bottom line is wrong.

>>>

😦

>>>

It is correct to say that equivalence needs to calculate equivalent focal length, aperture and ISO. As a result, you will get mostly identical results with different sensor sizes.

>>>

Did you actually read and understand the article? If the same D810 shoots in normal FF mode at f/8 and in crop mode at f/5.6, the crop mode is obviously letting in more photon flux. More light means better SNR. The results are not identical at all. The crop mode equivalent image is better by a stop.

>>>

Using equivalence for ISO shows that the smaller sensor has comparable high ISO values and is actually able to compete with the large sensor in this area. On the other hand, it shows that the smaller sensor is missing the low ISO values, which are always used to get cleaner images when enough light and time are available.

>>>

Why is m43 missing the lower ISO values? The E-M1 native ISO is 100. AFAIK, full frame native ISO is the same.

Are you saying that if FF shoots at ISO 100 then m43 must shoot at the non-existent ISO 25? Who in their right mind would do that or why would anyone ever want that? As a m43 shooter I will shoot at ISO 100 and gain shutter speed.

The Nikon D7000, Pentax K5 and Nikon D800/810 all use the same sensor technology. ISO 100 in the D7000 is the same as in the D800. So what gives the D800 the ISO advantage?

>>>

I decided to use MFT because of the size and weight of the equipment, but in FF terms I’m using ISO 400-6400 (not 100-1600).

>>>

I have absolutely no problems shooting at ISO 400-6400 with my m43. I don’t know where you got the idea that it’s not possible.

Stop measurebating and start shooting.

>>>

In addition, FF systems offer larger lenses that gather more light, if you want or can live with shallow DOF.

>>>

You can’t gain something without giving up on something. Larger lenses are back-breaking. Shallow DoF is a boring cliché. When you actually learn to shoot and you get over the shallow DoF honeymoon, you will understand what I mean.

>>>

Bottom line, full frame will give you cleaner images than crop sensors if you use low ISO settings and larger apertures, which are not available on the small sensor camera.

>>>

Should I say your bottom line is wrong?

I suggest that you re-read the article and my other fine articles so you will understand more.

Thanks.

“Are you saying that if FF shoots at ISO 100 then m43 must shoot at the non-existent ISO 25? Who in their right mind would do that or why would anyone ever want that? As a m43 shooter I will shoot at ISO 100 and gain shutter speed.”

Of course I would do that. Everybody does. The lowest ISO gives the cleanest image. As soon as you can afford the longer shutter speed, you will use it. It’s just like with larger sensors. Among FF shooters, nobody uses ISO 400 by default to gain shutter speed if it’s not necessary. Everybody uses the lowest possible ISO.

Do your own math. ISO 100 on m43 is equivalent to ISO 400 on FF. If you say no m43 shooter wants to use ISO 25, you must explain why FF shooters like to use ISO 100. It’s exactly the same.

“Do your own math. ISO 100 on m43 is equivalent to ISO 400 on FF. If you say no m43 shooter wants to use ISO 25, you must explain why FF shooters like to use ISO 100. It’s exactly the same.”

😂😂😂 Nope. 😂😂😂

Bumping up the ISO does not change the exposure. Exposure is only affected by f-stop and shutter speed. ISO 100 and 400 are never the same. ISO 100 captures four times more photons.

No m43 shooter would want to shoot at ISO 25 because no such sensor exists in the first place.

Photographers will or must shoot at native ISO if they want to maximise dynamic range. Fake low ISO results in blown highlights. You lose DR.

You made my day.

It seems that you know some photographic basics, but you are not able to combine them in a proper way or to explain clearly what you mean.

Example: You have an Olympus E-M5 with 16 MP and a Nocticron 42.5mm f/1.2 on one side and a 21 MP EOS 5D Mark II with the EF 85mm f/1.8 on the other, just to simplify the math a bit.

There is an object two metres away, and the light is good for 1/100 s at f/2.8, ISO 200 on FF. If you shoot from the same distance and want to create the same DOF, you’ll have to shoot the m43 at f/1.4, compensating for this with 1/200 s or ISO 100. With the Nocticron this is possible, but if you have a lens that is not that fast, your only choice is a faster shutter speed, losing the advantage of lowering the ISO. Additionally, ISO 100 on the E-M5 is not native.
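These numbers can be reproduced with a short sketch, assuming a crop factor of exactly 2 for m43 and the same subject distance: 85mm at f/2.8 becomes 42.5mm at f/1.4. Going from f/2.8 to f/1.4 admits two more stops of light per unit area, which could be spent, for example, as one stop of shutter speed (1/200 s) plus one stop of ISO (100). The helper names are hypothetical.

```python
import math

CROP_M43 = 2.0  # assumed crop factor for Micro Four Thirds


def m43_equivalent(ff_focal_mm, ff_f_number, crop=CROP_M43):
    """Focal length and f-number giving the same framing and DoF from the same distance."""
    return ff_focal_mm / crop, ff_f_number / crop


def stops_gained(ff_f_number, m43_f_number):
    """Extra stops of light per unit area at the faster m43 f-number."""
    return 2 * math.log2(ff_f_number / m43_f_number)


def spend_stops(shutter_s, iso, shutter_stops, iso_stops):
    """Use some stops for a faster shutter and the rest for a lower ISO."""
    return shutter_s / 2 ** shutter_stops, iso / 2 ** iso_stops


focal, f_number = m43_equivalent(85, 2.8)
gain = stops_gained(2.8, f_number)
shutter, iso = spend_stops(1 / 100, 200, shutter_stops=1, iso_stops=1)
print(f"m43: {focal}mm f/{f_number}, {gain:.0f} stops gained")
print(f"e.g. 1/{round(1 / shutter)} s at ISO {iso:.0f}")
```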

On the other end, diffraction blur starts to affect the picture somewhere between f/5.6 and f/8, while FF will be affected only beyond f/11, all depending on image scale.
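The dependence on pixel size can be sketched with the Airy disk diameter, 2.44·λ·N. The criterion used here (diffraction "starts" when the Airy disk spans two pixel pitches), the 550 nm wavelength and the sensor specs (E-M5: 17.3 mm and 4608 px wide; 5D Mark II: 36 mm and 5616 px wide) are all assumptions, and other rules of thumb give somewhat different thresholds. With them, the onset lands near f/5.6 for the E-M5 and near f/9.5 for the 5D Mark II, in the same ballpark as the figures in the comment.

```python
WAVELENGTH_UM = 0.55  # assumed: green light


def airy_disk_diameter_um(f_number, wavelength_um=WAVELENGTH_UM):
    """Diameter of the Airy disk (to the first dark ring): 2.44 * wavelength * N."""
    return 2.44 * wavelength_um * f_number


def pixel_pitch_um(sensor_width_mm, pixels_across):
    """Approximate pixel pitch from sensor width and horizontal pixel count."""
    return sensor_width_mm * 1000 / pixels_across


def diffraction_onset(pitch_um, pixels_spanned=2.0, wavelength_um=WAVELENGTH_UM):
    """f-number at which the Airy disk spans `pixels_spanned` pixels (one rule of thumb)."""
    return pixels_spanned * pitch_um / (2.44 * wavelength_um)


# Assumed sensor specs: width in mm, horizontal pixel count.
cameras = {"E-M5 (m43)": (17.3, 4608), "5D Mark II (FF)": (36.0, 5616)}

for name, (width_mm, pixels) in cameras.items():
    pitch = pixel_pitch_um(width_mm, pixels)
    print(f"{name}: pitch {pitch:.2f} um, onset around f/{diffraction_onset(pitch):.1f}")
```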

Now about using the image circle. If you’re using an FF or MF lens on m43, you’re using the best part, right, but it’s like driving a Bugatti Veyron at 50mph. If you use a lens that was made for m43, you lose that advantage.

So nearly everything you wrote is academic.

Don’t get me wrong, I like my m43 very much, because it is handy and delivers good quality, but I also don’t want to miss my FF when shooting portraits or working low-light.
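A rough way to sanity-check the diffraction figures in the comment above is to compare the Airy disk diameter (about 2.44 × wavelength × f-number) with the pixel pitch. This is a rule-of-thumb sketch, not a measurement: it assumes 550 nm light, the nominal sensor widths and horizontal pixel counts of the E-M5 (17.3 mm, 4608 px) and the 5D Mk II (36 mm, 5616 px), and treats diffraction as "starting to show" once the Airy disk spans about two pixels. Under those assumptions it lands near f/5.6 for the E-M5 and a little below f/10 for the 5D Mk II, in the same ballpark as the figures quoted; a different threshold shifts both numbers.

```python
# Rule-of-thumb sketch: when does diffraction start to show at the pixel level?
# Assumptions (not from the thread): 550 nm light, nominal sensor widths and
# horizontal pixel counts, and "visible" meaning the Airy disk spans ~2 pixels.

WAVELENGTH_UM = 0.55  # assumed wavelength of green light, in micrometres


def pixel_pitch_um(sensor_width_mm: float, pixels_across: int) -> float:
    """Approximate pixel pitch in micrometres from sensor width and pixel count."""
    return sensor_width_mm * 1000.0 / pixels_across


def airy_disk_um(f_number: float, wavelength_um: float = WAVELENGTH_UM) -> float:
    """Airy disk diameter (to the first dark ring): 2.44 * wavelength * N."""
    return 2.44 * wavelength_um * f_number


def diffraction_onset_f_number(pitch_um: float, pixels_spanned: float = 2.0,
                               wavelength_um: float = WAVELENGTH_UM) -> float:
    """f-number at which the Airy disk diameter equals `pixels_spanned` pixels."""
    return pixels_spanned * pitch_um / (2.44 * wavelength_um)


CAMERAS = {
    "E-M5 (m43)": (17.3, 4608),     # assumed sensor width in mm, pixels across
    "5D Mk II (FF)": (36.0, 5616),
}

for name, (width_mm, pixels) in CAMERAS.items():
    pitch = pixel_pitch_um(width_mm, pixels)
    onset = diffraction_onset_f_number(pitch)
    print(f"{name}: pitch {pitch:.2f} um, Airy disk at f/8 {airy_disk_um(8):.1f} um, "
          f"onset ~f/{onset:.1f}")
```

The two-pixel threshold is a common but arbitrary choice; the same Airy disk is a larger fraction of a smaller frame, which is why the onset moves to wider apertures on the smaller sensor.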

It seems that you know some photographic basics, but you are not able to combine them in a proper way or to explain clearly what you mean.

>>>

Nice intro 🙂

>>>

Example: You have an Olympus E-M5 with 16 MP and a Nocticron 42.5mm f/1.2 on one side, and a 21 MP EOS 5D Mk II with the EF 85mm f/1.8 on the other, just to simplify the math a bit.

There is an object two metres away, and the light is good for 1/100 s, f/2.8 at ISO 200 on FF.

>>>

1/100, f/2.8 at ISO 200 is EV9. Something I would never call “good light” but let’s carry on 🙂
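For reference, the exposure value of a setting is EV = log2(N²/t) for f-number N and shutter time t, and subtracting log2(ISO/100) expresses the scene light level relative to ISO 100. A minimal sketch with the settings quoted above gives about EV 9.6 for 1/100 s at f/2.8, or about EV 8.6 once the ISO 200 setting is referred to ISO 100, so "EV9" is the right ballpark. The function names are illustrative, not from any library.

```python
import math


def exposure_value(f_number: float, shutter_s: float) -> float:
    """EV of a camera setting: log2(N^2 / t)."""
    return math.log2(f_number ** 2 / shutter_s)


def light_level_ev100(f_number: float, shutter_s: float, iso: float) -> float:
    """Scene light level referred to ISO 100: EV - log2(ISO / 100)."""
    return exposure_value(f_number, shutter_s) - math.log2(iso / 100)


# Settings from the example above: 1/100 s, f/2.8, ISO 200.
print(f"EV of the setting: {exposure_value(2.8, 1 / 100):.2f}")
print(f"Light level at ISO 100: {light_level_ev100(2.8, 1 / 100, 200):.2f}")
```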

>>>

If you shoot from the same distance and want to create the same DOF,

>>>

Wait, why would I want a shallow DoF in such kind of light? This light is equivalent to a city skyline at sundown. Firstly, your AF accuracy is now reduced due to lack of light and you want shallow DoF? If anything, you would want a greater DoF to ensure that you have your subject in focus. You are starting to get funny. But let’s continue…

>>>

you’ll have to shoot the m43 at f/1.4, compensating with 1/200 s or ISO 100.

>>>

Ok, I’ll take this. I now have a shutter speed advantage at a much cleaner ISO so why not 🙂

>>>

With the Nocticron this is possible, but if you have a lens that is not that fast, your only choice is a faster shutter speed, losing the advantage of lowering ISO.

>>>

Right you are. Note, though, that f/1.8 is quite common in m43, so it’s a piece of cake.

>>>

Additionally, ISO 100 on the E-M5 is not native.

>>>

Right you are so if I go ISO 200 then I gain more shutter speed. Bonus!!!

>>>

On the other end, diffraction blur starts to affect the picture between f/5.6 and f/8 on m43, while FF will only be affected beyond f/11, all depending on image scale.

>>>

Yes, but I am at f/1.8 so that shouldn’t be a problem, no?

>>>

Now about using the image circle. If you’re using an FF or MF lens on m43, you’re using the best part of it, right, but it’s like driving a Bugatti Veyron at 50 mph.

>>>

Now why is that? This is a very funny analogy. Here’s a better analogy:

If your car is small (sensor), you only need a smaller engine and less fuel (light) to reach a certain speed. So my m43 needs less light to achieve the same exposure. To me that is an advantage, especially with fuel prices soaring 🙂

>>>

If you use a lens that was made for m43, you lose that advantage.

>>>

What’s the point in having a 5.0 V12 in a VW Beetle? I can’t see your point here. If anything, having a big car would only make you look like you are compensating for something. ROFL!

>>>

So nearly everything you wrote is academic.

>>>

Hardly. I just gave you real-world examples.

>>>

Don’t get me wrong, I like my m43 very much, because it is handy and delivers good quality, but I also don’t want to miss my FF when shooting portraits or working low-light.

>>>

Don’t get me wrong either. I own several m43, APS-C, FF and medium format cameras. I’m not biased. I’m informed.

Thanks for the entertaining comment 🙂

>>Something I would never call “good light”

I did not say it is good; I said it is good for … 😉

>>Wait, why would I want a shallow DoF in such kind of light?

Because you want to compare things by equalizing the parameters?

As I spoke about a portrait lens, I took settings for a portrait in, ok, not-so-good light.

Additionally, the falloff of DOF changes with sensor size.

>>I now have a shutter speed advantage at a much cleaner ISO so why not

You have one advantage, either ISO or shutter speed.

>>Note, though, that f/1.8 is quite common in m43, so it’s a piece of cake.

But what do you do if you have to compete with an FF pro lens at f/1.2? Or even the 50mm f/1.4 Planar?

>>Yes, but I am at f/1.8 so that shouldn’t be a problem, no?

For the example I took, you’re right. But it is a fact that diffraction blur depends on sensor size, and I just wanted to point it out.

>>If your car is small (sensor), you only need a smaller engine and less fuel (light) to reach a certain speed. So my m43 needs less light to achieve the same exposure. To me that is an advantage, especially with fuel prices soaring

Partly right. To explain the missing part it is hard to keep the analogy. 😉

But I’ll try. You have a tuned small car and a V8 big block. Both have the same power-to-weight ratio (sensor-to-light). In a race up to 60 mph, you don’t have to shift in the big block, while you have to shift twice in the small car.

If you’re using only the center of a bigger image circle, you get the best results, but if you shrink the image circle to get a smaller lens, you pay for it with all the problems a bigger sensor has with a bigger image circle.

>>I’m informed.

Me too. I have been photographing for nearly 30 years, the last 10 at a professional level, though not as a profession, because I want to keep it fun for myself; I’ve seen many things come and go.

I was never a fanboy of anything, but I learned some lessons.

One is that size does matter for DOF as part of image quality. The larger the area over which sharpness fades, the smoother the fade. You may notice the lower ISO, but only at the level of pixel peeping. If you print a picture at 80×60 cm, the only difference at a regular viewing distance of about 1 m will be the look, i.e. the DOF, not whether the picture was taken at a higher ISO. Maybe you’ll notice a faster shutter speed, due to a frozen moment, but that can be a pro as well as a con.

A second lesson, from the digital age, is that there is a critical pixel density (pixels per mm² of sensor) beyond which more pixels do not improve image quality but decrease it.

I own, among others, an EOS 7D with 18 MP on APS-C, an E-M5 with 16 MP on m43 and a 5D Mk II with 21 MP on FF. The 7D has nearly 54k pixels/mm², the E-M5 nearly 71k and the 5D something above 24k. Between the 7D and the E-M5 there is no real difference in RAW, but somewhere between 54k and 24k there is a peak where bigger pixels make a better picture, similar to a Gaussian distribution. Even the 80 MP back from Phase One has less than 37k.

I read some scientific articles about that phenomenon; the only thing I remember is that at a certain size the individual photosites interact due to micro-reflection and refraction.

As technology improves, the Gaussian distribution may shift, and yes, there will be a time when future small-pixel sensors top the quality of older big-pixel sensors, but not at the moment.
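The pixel densities in the comment above can be reproduced from pixel count divided by sensor area. A minimal sketch: the pixel counts are the nominal figures from the comment, while the sensor dimensions are assumed typical values for each format (they are not stated in the thread). With those assumptions it gives roughly 54k, 71k, 24k and just under 37k pixels/mm², matching the numbers quoted.

```python
# Nominal pixel counts from the comment; the sensor dimensions (mm) are assumed
# typical values for each format and are not given in the thread.
SENSORS = {
    "EOS 7D (APS-C)": (22.3, 14.9, 18e6),
    "E-M5 (m43)": (17.3, 13.0, 16e6),
    "5D Mk II (FF)": (36.0, 24.0, 21e6),
    "Phase One 80 MP back": (53.7, 40.4, 80e6),
}


def pixels_per_mm2(width_mm: float, height_mm: float, pixel_count: float) -> float:
    """Pixel density: total pixels divided by sensor area in mm²."""
    return pixel_count / (width_mm * height_mm)


for name, (w, h, n) in SENSORS.items():
    print(f"{name}: {pixels_per_mm2(w, h, n) / 1000:.1f}k pixels/mm²")
```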

I did not say it is good; I said it is good for … 😉

>>> Don’t backpedal now. Surely, that exposure reading was intentional. You wanted to force the lens to open up. Nobody shoots f/2.8 ISO 100 in good light.

Because you want to compare things by equalizing the parameters?

>>> I thought you didn’t like my article because it was too academic. I gave you real world examples and now you want to be purely theoretical. Make up your mind already.

As I spoke about a portrait lens, I took settings for a portrait in, ok, not-so-good light.

Additionally, the falloff of DOF changes with sensor size.

>>> Obviously. But shallow DoF is a problem, not a feature. You, claiming to be a pro, should know that. The shallow DoF cliché is for noobs.

You have one advantage, either ISO or shutter speed.

>>> Nope. I got both. You know why? Because m43 vs FF is two stops of difference. I could use one stop of ISO plus one stop of shutter speed. Very simple math really.
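The stop arithmetic in this exchange can be made explicit. Going from f/2.8 to f/1.4 lets in (2.8/1.4)² = 4 times the light, i.e. two stops, which is the geometric progression the thread keeps returning to. Those two stops can then be spent as one stop of shutter speed (1/100 s to 1/200 s) plus one stop of ISO (200 to 100) while keeping the same image brightness. A minimal sketch using the settings from the example in this thread; the helper names and the sign convention (positive means a brighter image) are assumptions of this illustration.

```python
import math


def stops_from_aperture(n_from: float, n_to: float) -> float:
    """Stops gained going from f/n_from to f/n_to: 2 * log2(n_from / n_to)."""
    return 2 * math.log2(n_from / n_to)


def stops_from_shutter(t_from: float, t_to: float) -> float:
    """Stops gained going from a t_from-second to a t_to-second exposure."""
    return math.log2(t_to / t_from)


def stops_from_iso(iso_from: float, iso_to: float) -> float:
    """Stops of brightness gained going from iso_from to iso_to."""
    return math.log2(iso_to / iso_from)


# FF: 1/100 s, f/2.8, ISO 200  ->  m43 at f/1.4 for the same DoF, surplus spent.
aperture = stops_from_aperture(2.8, 1.4)        # two stops more light
shutter = stops_from_shutter(1 / 100, 1 / 200)  # one stop less
iso = stops_from_iso(200, 100)                  # one stop less
print(f"aperture {aperture:+.1f}, shutter {shutter:+.1f}, ISO {iso:+.1f}, "
      f"net {aperture + shutter + iso:+.1f}")
```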

But what do you do if you have to compete with an FF pro lens at f/1.2? Or even the 50mm f/1.4 Planar?

>>> You are being too academic again. You know too well (I assume) that f/1.2 is a PITA to focus. Shooting at f/1.2 is hit or miss, mostly miss.

For the example I took, you’re right. But it is a fact that diffraction blur depends on sensor size, and I just wanted to point it out.

>>> No arguments here but I totally avoided being academic.

Partly right. To explain the missing part it is hard to keep the analogy.😉

But I’ll try. You have a tuned small car and a V8 big block. Both have the same power-to-weight ratio (sensor-to-light). In a race up to 60 mph, you don’t have to shift in the big block, while you have to shift twice in the small car.

>>> But it is not a race. The goal is correct exposure: light gathered per unit area. Same shutter speed and f-stop. We are talking about exactly the same exposure. In the car analogy, it’s about how much fuel it takes to get from point A to point B. The smaller car wins every time.

If you’re using only the center of a bigger image circle, you get the best results, but if you shrink the image circle to get a smaller lens, you pay for it with all the problems a bigger sensor has with a bigger image circle.

>>> Well, not so fast. Fact is, smaller lenses are much, much better in quality than bigger lenses. It’s an engineering problem. iPhone and point-and-shoot lenses are much sharper than any full frame lens. They have to be, because they need to perform at their widest aperture. Fact.

Me too. I have been photographing for nearly 30 years, the last 10 at a professional level, though not as a profession, because I want to keep it fun for myself; I’ve seen many things come and go.

I was never a fanboy of anything, but I learned some lessons.

One is that size does matter for DOF as part of image quality. The larger the area over which sharpness fades, the smoother the fade. You may notice the lower ISO, but only at the level of pixel peeping. If you print a picture at 80×60 cm, the only difference at a regular viewing distance of about 1 m will be the look, i.e. the DOF, not whether the picture was taken at a higher ISO. Maybe you’ll notice a faster shutter speed, due to a frozen moment, but that can be a pro as well as a con.

>>> I’m noticing a common theme here. You seem to think that shallow DoF is a feature. While it can be used as a creative tool, it is inherently a problem. It’s what you trade for the gain in light. Basic photography will always tell you that you trade light against DoF.

A second lesson, from the digital age, is that there is a critical pixel density (pixels per mm² of sensor) beyond which more pixels do not improve image quality but decrease it.

I own, among others, an EOS 7D with 18 MP on APS-C, an E-M5 with 16 MP on m43 and a 5D Mk II with 21 MP on FF. The 7D has nearly 54k pixels/mm², the E-M5 nearly 71k and the 5D something above 24k. Between the 7D and the E-M5 there is no real difference in RAW, but somewhere between 54k and 24k there is a peak where bigger pixels make a better picture, similar to a Gaussian distribution. Even the 80 MP back from Phase One has less than 37k.

I read some scientific articles about that phenomenon; the only thing I remember is that at a certain size the individual photosites interact due to micro-reflection and refraction.

As technology improves, the Gaussian distribution may shift, and yes, there will be a time when future small-pixel sensors top the quality of older big-pixel sensors, but not at the moment.

>>> This is true and that’s why I think that the 5DS is stupid. You just can’t cram 50Mp into a measly 35mm sensor. And as a result, sensor performance suffers. The 5DS and the ancient E-M5 have exactly the same sensor performance.

I think we have hit the optimal limit of sensor efficiency, so the only factor affecting performance is pixel pitch. This is demonstrated by the similar performance of the K5 vs D810 and the E-M5 vs 5DS.

First, your article wasn’t too academic; for me, the conclusion was academic, in the sense of not being very relevant in practice. Sorry, I’m not a native speaker. Maybe I used a phrase that is not common in English.

We’re getting to the core. 😉

You claim shallow DOF is a problem; I claim it is a creative possibility that I don’t want to miss.

On one point I have to apologize. M43 has half the sensor size of FF, so you have two stops more light when working with the same DOF.

I was trapped in the thought that one stop gives double the light, forgetting the geometric progression that gives us four times the light. 😉

That smaller lenses are much easier to produce is a fact too. High quality glass is difficult to make, especially in bigger size and that will not change in the next years, as it hasn’t changed (so) much since the days of Abbe, Schott and Rudolph.

You can reduce vignetting and the loss of edge sharpness, but not eliminate them. They are still there if you use an MF lens on m43, but they are very hard to measure. The ratio of glass thickness to production tolerance gives bigger lenses an advantage.

The sharp pictures of iPhones and point-and-shoot cameras are more a result of highly invasive image processing with contrast optimization than of optical quality. 😉

I heard about a proof of concept (POC) using many pinholes instead of glass lenses and computing the resulting cloud of images into a single image. That’s a way into the future, as light-field photography is. Probably the days of Bayer-pattern sensors are also numbered.

First, your article wasn’t too academic; for me, the conclusion was academic, in the sense of not being very relevant in practice. Sorry, I’m not a native speaker. Maybe I used a phrase that is not common in English.

We’re getting to the core.😉

You claim shallow DOF is a problem; I claim it is a creative possibility that I don’t want to miss.

>>> And you think that m43 is not capable of such creative possibilities?! Full frame has no monopoly on shallow DoF. If creative DoF is what you want, then what constitutes “shallow enough” becomes a subjective matter.

On one point I have to apologize. M43 has half the sensor size of FF, so you have two stops more light when working with the same DOF.

>>> Better check your facts again. APS-C is half the full frame. M43 is only a quarter. Half a sensor captures half the light so that’s one stop. M43 then has two stops less than FF. You are still very confused with very basic photography.
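The "half" and "quarter" figures are round numbers. A sketch comparing nominal sensor areas (the dimensions below are assumed typical values and vary slightly by model) puts APS-C at roughly 1.2 to 1.4 stops and Micro Four Thirds at a little under 2 stops less total light than full frame, at the same exposure. The helper names are illustrative.

```python
import math

# Nominal sensor dimensions in mm (assumed typical values, not from the thread).
FORMATS = {
    "Full frame": (36.0, 24.0),
    "APS-C (Canon)": (22.3, 14.9),
    "APS-C (Nikon/Sony)": (23.5, 15.6),
    "Micro Four Thirds": (17.3, 13.0),
}


def area_mm2(width: float, height: float) -> float:
    """Sensor area in mm²."""
    return width * height


def stops_below_full_frame(width: float, height: float) -> float:
    """Stops less total light than FF at the same exposure: log2(A_ff / A)."""
    return math.log2(area_mm2(36.0, 24.0) / area_mm2(width, height))


for name, (w, h) in FORMATS.items():
    print(f"{name}: {area_mm2(w, h):.0f} mm², "
          f"{stops_below_full_frame(w, h):.2f} stops below FF")
```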

I was trapped in the thought that one stop gives double the light, forgetting the geometric progression that gives us four times the light. 😉

>>> And still you haven’t got it right.

That smaller lenses are much easier to produce is a fact too. High quality glass is difficult to make, especially in bigger size and that will not change in the next years, as it hasn’t changed (so) much since the days of Abbe, Schott and Rudolph.

You can reduce vignetting and the loss of edge sharpness, but not eliminate them. They are still there if you use an MF lens on m43, but they are very hard to measure. The ratio of glass thickness to production tolerance gives bigger lenses an advantage.

The sharp pictures of iPhones and point-and-shoot cameras are more a result of highly invasive image processing with contrast optimization than of optical quality. 😉

>>> Where are you getting these “facts”?! Do you know that the iPhone or a lot of P&S cameras are capable of shooting RAW? It’s not about in-camera processing. It’s lens design. Smaller lenses are easier and cheaper to make. M43 lenses are one of the best in the industry. Yes, vignetting is there but very minimal. It’s not as pronounced as in full frame. I really don’t see your point and you remain very wrong in your claim that the same amount of lens issues exist in FF and m43. That is totally baseless.

I heard about a proof of concept (POC) using many pinholes instead of glass lenses and computing the resulting cloud of images into a single image. That’s a way into the future, as light-field photography is. Probably the days of Bayer-pattern sensors are also numbered.

>>> Haven’t heard of this and it’s irrelevant to this discussion.

Ok, I get it. Twice.

First, the sensor area of m43 is a quarter of FF, right (that’s why it’s 2 stops); the crop factor is 2.

Second, you want to defend your theme small sensor = good sensor and big sensor = useless = bad sensor at all costs.

There are production tolerances when cutting or molding glass for lenses. If you have a lens that is 1 cm thick and another that is only 1 mm thick, and both have a tolerance of 0.1 µm, then relative to its thickness the tolerance of the thinner lens is one order of magnitude larger.

I’ve seen RAW files from some smartphones, and what I’ve seen was way beyond the quality of my first Digital Rebel.

But let’s end this discussion. You have your point of view and I have mine, and each of us thinks the other is not right at all.
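The tolerance argument in the comment above reduces to simple arithmetic: the same absolute tolerance is a ten times larger fraction of a 1 mm element than of a 1 cm element. A minimal sketch with the figures from the comment (0.1 µm tolerance, 1 cm and 1 mm thickness); the helper name is illustrative.

```python
def relative_tolerance(tolerance_um: float, thickness_mm: float) -> float:
    """Tolerance as a fraction of element thickness (thickness converted to µm)."""
    return tolerance_um / (thickness_mm * 1000.0)


thick = relative_tolerance(0.1, 10.0)  # 1 cm element
thin = relative_tolerance(0.1, 1.0)    # 1 mm element
print(f"1 cm: {thick:.0e}, 1 mm: {thin:.0e}, ratio: {thin / thick:.0f}x")
```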

“Second, you want to defend your theme small sensor = good sensor and big sensor = useless = bad sensor at all costs.”

Never said that. I own m43, APS-C, full frame and medium format cameras. I gain nothing by bashing full frame. I’m not bashing full frame. I’m bashing equivalence.

And as per your first sentence:

You made my day 🙂

>Try enlarging the bigger image by exactly the same proportion and you will see EXACTLY the same noise as that of the enlarged tiny sensor.

Noise in the absolute sense (i.e. how it’s measured, etc.) is not the same thing as perceived noise. The former is independent of enlargement, or even of display at all, and is the relevant thing in this topic. The latter depends on how big you print and is not relevant, unless you want to say that printing big makes noise more apparent. Well, duh, it does, but what’s the point of repeating that over and over again while ignoring evidence that other things also influence noise?

Please see: https://aberration43mm.files.wordpress.com/2015/02/compared.png

>So, did the larger image suddenly lose some light by enlarging?

You’re confusing apparent noise and noise. Enlarging influences how much we see noise.

But if you print at the SAME OUTPUT SIZE, then the more total light is captured, the less noise you will see. WHY you insist on enlarging by the same proportion is beyond me. If you want an A4-sized print, you print A4 regardless of sensor size.
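The "same output size" argument can be sketched with a simplified shot-noise model. It assumes photon shot noise dominates (Poisson statistics, so SNR = √N; read noise and quantum-efficiency differences are ignored), the same exposure in photons per mm² on both sensors, and the same number of output pixels, so each output pixel collects light from a patch whose area is proportional to the sensor size. The photon density and output pixel count are hypothetical placeholders; the FF/m43 SNR ratio, about 1.96 (the square root of the roughly 3.8× area ratio), does not depend on them.

```python
import math


def output_pixel_snr(photons_per_mm2: float, sensor_area_mm2: float,
                     output_pixels: int) -> float:
    """Shot-noise-limited SNR of one output pixel: sqrt of the photons it collects."""
    photons_per_output_pixel = photons_per_mm2 * sensor_area_mm2 / output_pixels
    return math.sqrt(photons_per_output_pixel)


# Hypothetical placeholders: same exposure on both sensors, same A4-sized output.
PHOTONS_PER_MM2 = 100_000_000
OUTPUT_PIXELS = 3508 * 2480  # roughly A4 at 300 dpi

ff = output_pixel_snr(PHOTONS_PER_MM2, 36.0 * 24.0, OUTPUT_PIXELS)
m43 = output_pixel_snr(PHOTONS_PER_MM2, 17.3 * 13.0, OUTPUT_PIXELS)
print(f"FF SNR {ff:.1f}, m43 SNR {m43:.1f}, ratio {ff / m43:.2f}")
```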

>And if print size is indeed a gauge of total light

It’s not, and no one has said it. You’re confusing noise as a phenomenon with how noise manifests itself in a print. Why not just look at this and then think for a while: https://aberration43mm.files.wordpress.com/2015/02/compared.png

>then what happens if you print at a smaller size? Will the smaller print become brighter?

Brightness is a function of processing, not a function of how much light there is.

>Will it overexpose?

Exposure is a function of ambient light, exposure time and f-number. How bright you print the image is completely independent of exposure.

>Where does all the extra light go when you downsize? ROFL!!!

Brightness of output is only a matter of processing. ROLF indeed.

>Can you see your broken logic? If you still don’t understand please leave. I have no intention of educating a brainwashed mind.

So when evidence contradicts your worldview the evidence will be ignored? Please explain https://aberration43mm.files.wordpress.com/2015/02/compared.png – how can your worldview explain it?

“Brightness is a function of processing, not a function of how much light there is.”

Of all the stupid things you’ve said, this has got to be the most stupid and the funniest. Are you saying that exposure does not control how bright or how dark an image becomes? Are you saying that a completely dark image with ZERO light can be processed so it would appear bright? Am I talking to a complete idiot?

Why not discuss in a public forum? Would be much clearer. Too much of a coward to do that? 😉

>>> This is true and that’s why I think that the 5DS is stupid. You just can’t cram 50Mp into a measly 35mm sensor. And as a result, sensor performance suffers. The 5DS and the ancient E-M5 have exactly the same sensor performance.

Of course, every sensor designer on this planet disagrees with your idea of a pixel limit, and all the image sensor performance tests show that the 5DS has far more potential than the E-M5.

>>>I think we have hit the optimal limit of sensor efficiency that the only factor affecting performance is pixel pitch.

Except that readout noises keep going down and quantum efficiency keeps going up, crosstalk keeps going down, banding, PRNU etc. improve.

>>> This is demonstrated by the similar performance of the K5 vs D810 and the E-M5 vs 5DS.

Except that in both those pairs the bigger-sensor camera easily beats the smaller-sensor camera when it comes to image quality. IGNORING UNCOMFORTABLE EVIDENCE DOES NOT MAKE IT GO AWAY. ROLF.

>>> I’m bashing equivalence.

Which is something you obviously don’t understand at all.

Explain this: https://aberration43mm.files.wordpress.com/2015/02/compared.png

YOU CAN’T, THUS YOU IGNORE IT. ROLF.

Mark, you’re wrong about the lenses. Smartphone lenses are absolutely stunning in performance (just check out the MTFs). But they have to be stunning to perform at all, because the images smartphone lenses draw have to be enlarged a lot more than those from, for example, full frame cameras. And the more you enlarge, the more you enlarge the optical deficiencies. That’s why, for example, large format lenses are very simple and not too good: they don’t have to be.

The quality of RAW data from smartphones is low because of the small amount of light captured, not because of the optics. You’ll get similarly low-quality RAW data from any camera if you collect a similar amount of total light.